The brain is capable of storing the computer equivalent of a petabyte, or 1 million gigabytes.1 That’s the equivalent of 4.7 billion books. It has 86 billion neurons, 400 miles of capillaries, 100 thousand miles of nerve fibers, and more than 10 trillion synapses.1 And yet, despite this, our memory feels as fragile as a butterfly in a hurricane. How is it that we are theoretically capable of so much yet feel as though we can remember so little? In this article, you’re going to learn about the current understanding of what memory is, how it’s stored and recalled, the intriguing phenomenon of forgetting, and how you can use all this information to improve your own memory.
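For readers who want to check the storage comparison above, here is a minimal sketch of the arithmetic in Python. It assumes decimal (SI) units, where a gigabyte is 10^9 bytes and a petabyte is 10^15 bytes. The variable names are illustrative, and the per-book size is only what the 4.7 billion figure implies, not a measured value.

```python
# Assumption: decimal (SI) storage units.
BYTES_PER_GIGABYTE = 10**9
BYTES_PER_PETABYTE = 10**15

# How many gigabytes make up one petabyte?
gigabytes_per_petabyte = BYTES_PER_PETABYTE // BYTES_PER_GIGABYTE

# The article's comparison: one petabyte is roughly 4.7 billion books.
BOOKS_PER_PETABYTE = 4_700_000_000
implied_bytes_per_book = BYTES_PER_PETABYTE / BOOKS_PER_PETABYTE

print(f"{gigabytes_per_petabyte:,} GB per PB")
print(f"about {implied_bytes_per_book / 1000:.0f} KB per book")
```

Under these assumptions, each "book" works out to a couple hundred kilobytes, which is in the range of a plain-text novel.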

What Even Is Memory?

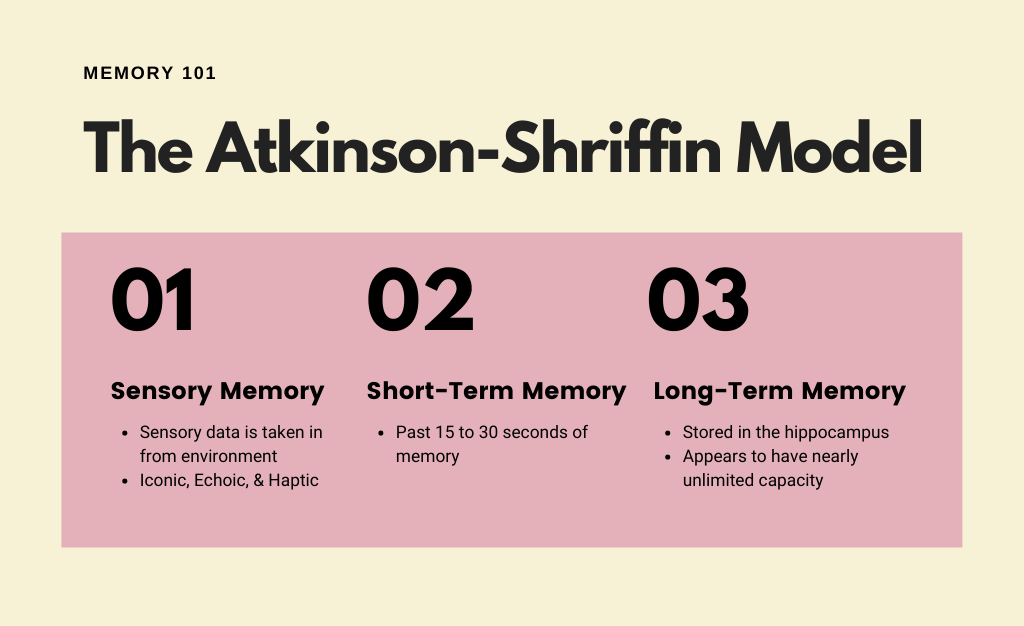

Unfortunately, the exact science of how memory works on the individual neuronal level is not currently understood. However, there is a good understanding of the general activity of different parts of the brain and there are a number of prevailing, intuitive theories on the high-level view of how memory works, the most prominent of which is the Atkinson-Shiffrin model.

The High Level Structure of Memory

The Atkinson-Shiffrin model posits that the first stage of memory is the reception of sensory data.1,2 At this level, sensory information is gathered from the environment and briefly held in the mind. The three main categories in the current field of research are iconic, echoic, and haptic memory, which correspond to sight, sound, and touch.2

The next level is short-term memory.1 Short-term memory includes exactly what is in your mind at a given point in time, which is called working memory, and the traces or remnants of what was in your mind not too long ago, usually somewhere between 15-30 seconds prior.1,3

The final and most well-known level is long-term memory, which is what you usually think of when you think of a memory. The fascinating part is that, while it may not seem like it, long-term memory seems to have an almost unlimited capacity, which is something that we will dive deeper into in a bit.1

The Process Of Memory

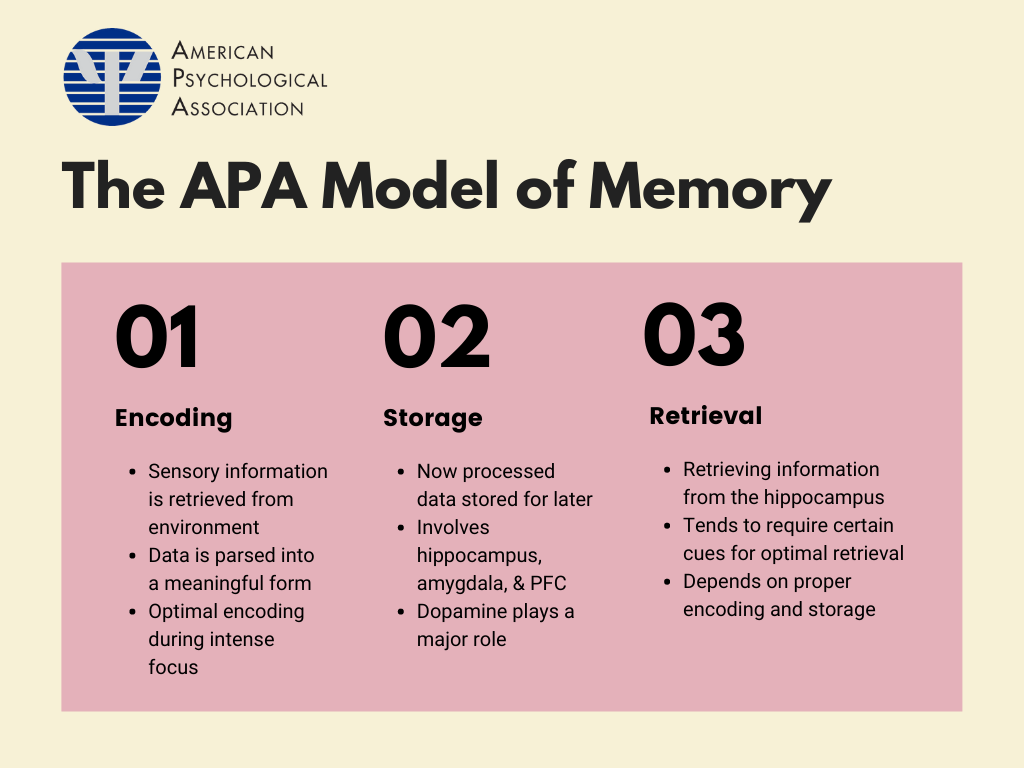

The Atkinson-Shiffrin model is useful for describing memory, but it does not really say much about how memories are formed and recalled. The American Psychological Association (henceforth called the APA) has created a model that describes current theory on how memories, specifically long-term memories, are formed. It is composed of three phases: encoding, storage, and retrieval.

Encoding

To begin with, information on the sensory level must be taken in by the sensory organs, whether it be words on a page or the party of a lifetime. However, we can’t simply have a recording of all of this information as a memory; it would be extremely inefficient to capture at the party every single square centimeter of vision, every sound, every sensation, every emotion, for even a second. This information must be filtered and parsed so that only the relevant things are even considered. When reading a textbook, you don’t need to remember the amount of white space on the page or the texture of the page; your brain learns to filter out this information and focus on the words. Not only that, but it focuses on the meaning of those words and how you actively processed their value at that moment instead of memorizing the words and their order.

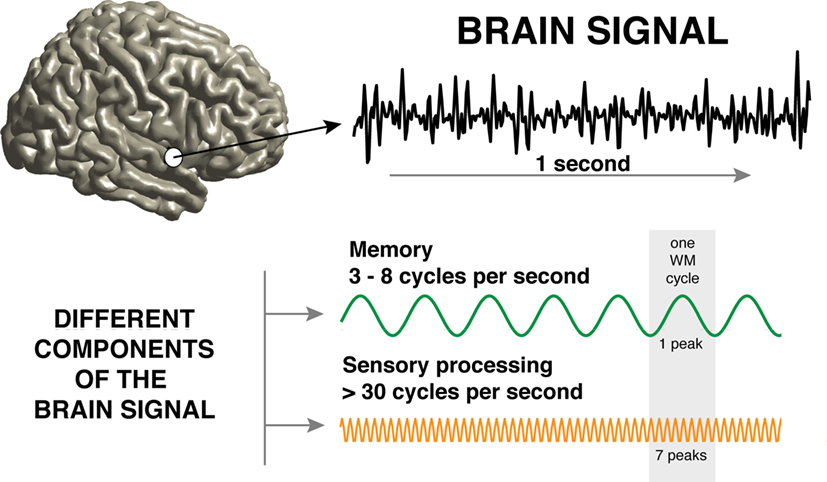

One major aspect of encoding is that sensory processing works in tandem with working memory.3 When neurons are actively functioning, they send electrical signals to each other at rapid rates. Each burst of activity and the inactivity that follows is called a cycle, and this electrical activity can be measured. Studies have found that working memory tends to run at 3-8 cycles per second, whereas the processing of vast amounts of sensory information can run at 30-100 cycles per second.3 Because both processes occur simultaneously, the brain groups the sensory cycles into chunks of about seven within each working memory cycle.3 In other words, each working memory cycle, which defines your short-term memory, contains about seven cycles of sensory information on average, which could explain the experimental finding that people can only hold about 5 to 7 things in mind at a time.3 This helps to explain why “chunking” information is a popular study tip: it works with the brain’s natural tendencies. By breaking information such as a phone number into smaller chunks, the brain can remember the chunks and associate them with each other rather than remembering one long string of information.
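To make the phone number example concrete, here is a small Python sketch of chunking. The `chunk` helper, the 3-3-4 grouping, and the phone number itself are all hypothetical choices for illustration; this shows the study technique, not how the brain actually implements it.

```python
def chunk(digits: str, sizes: list[int]) -> list[str]:
    """Split a digit string into consecutive groups of the given sizes."""
    if sum(sizes) != len(digits):
        raise ValueError("chunk sizes must add up to the number of digits")
    groups = []
    start = 0
    for size in sizes:
        groups.append(digits[start:start + size])
        start += size
    return groups


phone_number = "5558675309"  # hypothetical ten-digit number
chunks = chunk(phone_number, [3, 3, 4])

# Ten separate items become three, which fits within the 5 to 7 item limit.
print(len(phone_number), "digits ->", len(chunks), "chunks:", "-".join(chunks))
```

Ten individual digits exceed the 5 to 7 item limit described above, but three chunks fit comfortably within it.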

The idea that the strength of a memory is determined by the number of sensory cycles within each working memory cycle is exploited by the brain during moments of high concentration and learning. During this time, the brain slows down the frequency of working memory to around 3 cycles per second while maximizing sensory cycles.3 While this does not seem to increase the amount of information actually retained, it does appear that the increased sensory cycles per working memory cycle do increase the amount of sensory processing that can be done.3 This prepares the information to be stored in a more efficient way.
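The relationship between the two rhythms is simple division, and it can be sketched as follows. The frequency ranges come from the text; the specific "typical" values of 40 and 6 cycles per second are assumptions picked from inside those ranges purely for illustration, as is the function name.

```python
def sensory_cycles_per_wm_cycle(sensory_hz: float, working_memory_hz: float) -> float:
    """Number of sensory cycles that fit inside one working memory cycle."""
    return sensory_hz / working_memory_hz


# Ranges quoted in the text (cycles per second).
WORKING_MEMORY_RANGE_HZ = (3, 8)
SENSORY_RANGE_HZ = (30, 100)

# Assumed mid-range values, chosen only to illustrate the "about seven" figure.
typical = sensory_cycles_per_wm_cycle(sensory_hz=40, working_memory_hz=6)

# High concentration: working memory slows to about 3 cycles per second
# while sensory cycles are maximized.
focused = sensory_cycles_per_wm_cycle(
    sensory_hz=SENSORY_RANGE_HZ[1],
    working_memory_hz=WORKING_MEMORY_RANGE_HZ[0],
)

print(f"typical: about {typical:.1f} sensory cycles per working memory cycle")
print(f"focused: about {focused:.1f} sensory cycles per working memory cycle")
```

With these assumed numbers, the typical case lands near seven sensory cycles per working memory cycle, while the focused case packs in several times as many.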

Storage

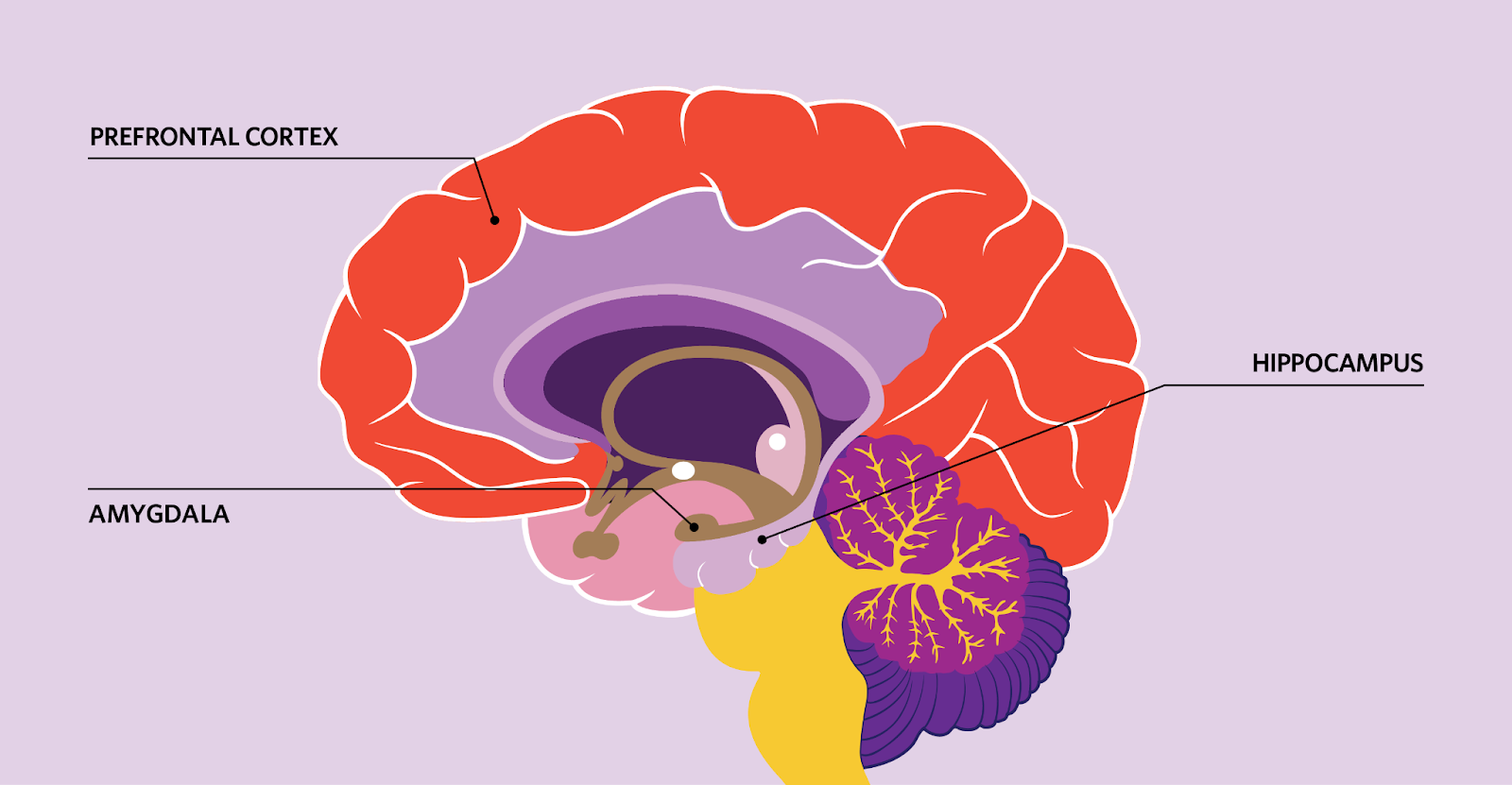

After the information has been parsed so that it can be stored properly, the APA’s theory continues by saying that this information must now be stored. Through fMRI (functional Magnetic Resonance Imaging) studies, which track changes in blood flow and oxygenation as a proxy for the activity of different parts of the brain, we’ve discovered that many different parts of the brain are involved in the process of memory, including the hippocampus, amygdala, and prefrontal cortex.

While this is not fully understood, it seems that after sensory input has been processed, this information goes to the hippocampus, an area deep within your brain.4 At this point in time, which is usually less than a second after the sensory information was first received by sensory organs, the information is categorized as positive, negative, or neutral.

When a memory is positive, dopamine is released, and this memory is “tagged” and will grow stronger with time given further dopaminergic repetitions.4 Professor Charan Ranganath, a neuroscientist at the UC Davis Center for Neuroscience, published a paper concerning the relationship between reward and memory in 2011. Ranganath theorized that, given the small proportion of detailed memories a person has of a given day, the brain will likely “save those memories that might be most important for obtaining rewards in the future.”5 In their study, Ranganath and post-doctoral researcher Matthias Gruber found that participants “were better at remembering objects that were associated with a high reward.”5

An unexpected yet interesting discovery of their research was that, while at rest, some subjects were replaying the rewarding memories in their mind, and the subjects who did do this demonstrated better recall of that information.5 Moreover, fMRI scans found that there were increased interactions between the hippocampus and the ventral tegmental area complex, an area of the brain heavily involved in reward processing.5 This further indicates that reward was responsible for the improved recall of memory.

On the other hand, memories that are deemed as negative tend to correspond with increased activity in the amygdala, which is the area of the brain most associated with emotion.5 This indicates that experiences associated with negative results are stored on a more instinctual and limbic level. For instance, when a person touches a hot stove, they quickly learn from this negative experience and instinctively know to not touch fire again. They don’t have to think about a specific experience to want to avoid the fire; they simply avoid it.

Neutral experiences tend to not be remembered as well simply because no reward was gained from anything in that experience, and nothing caused enough negative emotion to warrant avoidance.

Retrieval

The final phase in the APA’s model is retrieval.1 While not strictly part of the process of forming a memory, it is still a critical part of understanding memory. During retrieval, a memory, usually a long-term one, is brought to conscious awareness. Repeated retrieval is usually what is necessary to cement a memory into the truly long-term kind that most people have in mind.

Forgetting

With this understanding of how memory is formed, we can now begin to formulate an understanding of how forgetting works. Again, there is no definitive scientific understanding of forgetting, but there are a number of theories as to why and how forgetting occurs.

Why Do We Forget?

The brain is only capable of doing so much at a time, and for the majority of human existence, the brain could not simply take its time in making a decision. A lot of the time, the speed with which a decision, especially a prediction, could be made meant the difference between life and death. Stanford Associate Psychology Professor Anthony Wagner stated the importance of forgetting after publishing research in 2007 on the role of the prefrontal cortex in memory: “What forgetting does is allow the act of prediction to occur much more automatically, because you’ve gotten rid of competing but irrelevant predictions.”6 This explains the previously mentioned idea that the brain’s capability for memory seems limitless; the brain simply obstructs paths to predictions and memories that are not deemed as useful so that it can be more efficient at coming to a conclusion.

So how does the brain determine what memories are more important? Wagner claims, “Any act of remembering re-weights memories, tweaking them to try to be more adaptive to remember something. The brain is adaptive and one feature of that is not just strengthening some memories but also suppressing or weakening others.”6 In other words, the prefrontal cortex makes more important memories more accessible so that the brain’s computing power can be used more efficiently. This makes sense, as the experiences that are repeated tend to be the ones that are most strongly ingrained within us.

How Do We Do It?

Many of the prevailing theories point toward errors in the process of encoding, storing, and retrieving that may cause what we call forgetting.

Beginning with encoding, one theory is that memories can be “forgotten” if the information was not properly processed or was never even received at all.1 In other words, if a person is not fully concentrated or aware, the information may not be stored in the brain in the first place. This is like when you read a paragraph and have to keep rereading it because, while your eyes are scanning the words, you are not processing the information well enough for it to be stored!

Moving on to storage, there are a number of different theories. The first is that, even if the information is properly processed and ready to be stored, it’s possible for the information to not be stored because your brain has to move on to processing new information immediately!7 In a study by Michaela T. Dewar, Nelson Cowan, and Sergio Della Sala in 2007, it was found that “forgetting can be induced by any subsequent mentally effortful interpolated task, irrespective of its content.”7 In other words, consecutive activities that require high computational effort can cause you to not be able to store the preceding memory. There is also the “Interference Theory,” where similar memories can interfere with each other.1, 7 In retroactive interference, the storage of new information comes at the cost of conflicting and superseding old, similar information that had been previously stored; proactive interference is the opposite, where new information cannot be remembered because of how cemented old, similar memories are.1

To better illustrate interference theory, here’s a common example. If a website makes you create a new password that complies with their new guidelines of two and a half special characters and at least one negative number, you may focus extremely hard and be able to actually cement this new password into your mind. However, in the process, you knock that old password out of your mind. This would be retroactive interference. If you couldn’t remember the new password and could only remember the old password, that would be proactive interference.

Finally, there can be errors with retrieval, specifically retrieval cues.1 When you are trying to recall a memory, there are certain ideas or things that can bring that memory to mind. For instance, the scent of some dish can remind you of food in your hometown. Retrieval cue issues occur when a memory cannot be recalled without the appropriate cues. For example, if you couldn’t remember the name of some dish you saw on someone’s Instagram, but you recognized it as soon as you saw the actual name, that would likely be a retrieval cue issue.

What Do I Do About This?

From this understanding, a number of different ways to improve memory can be realized:

- Since working memory automatically chunks sensory experiences into groups, you can take advantage of this by chunking information and associating the chunks with each other rather than trying to remember the information linearly.

- Focus is extremely important when it comes to actually taking in and processing information. Regardless of how smart you may be or how long you study, a lack or diffusion of attention will prevent you from properly forming memories. So no multitasking!

- Since reward is associated with better recall, adding a rewarding, dopaminergic aspect to studying or learning can help memories stick. This could be implemented by treating yourself after a study session or using the Pomodoro technique to consistently build breaks and rewards into your studying.

- Because neutral experiences tend to not be remembered as well, try your best to spice it up and include novelty in your studying so that the experience stands out and the memories can be stored.

- Understanding retrieval cues can be extremely important for studying. You can actively create cues that will likely be helpful for the test rather than simply have your brain implicitly produce them for you. For instance, you could actively associate the word “lipid” with “nonpolar hydrophobic carbon-based molecule” and a multitude of other pieces of information so that on the test, each key word brings up the relevant information.
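The retrieval cue tip in the last bullet can be turned into a tiny study aid. The sketch below is only an analogy for building cue-to-fact associations on purpose, not a model of how the brain stores them; the function names `associate` and `recall` are hypothetical, and the facts are split from the “lipid” example above.

```python
from collections import defaultdict

# Maps a cue word to the facts deliberately associated with it.
cue_to_facts = defaultdict(list)


def associate(cue: str, fact: str) -> None:
    """Deliberately link a fact to a cue word (case-insensitive)."""
    cue_to_facts[cue.lower()].append(fact)


def recall(cue: str) -> list[str]:
    """Return every fact linked to the cue, or an empty list if none exist."""
    return list(cue_to_facts.get(cue.lower(), []))


associate("lipid", "nonpolar")
associate("lipid", "hydrophobic")
associate("lipid", "carbon-based molecule")

print(recall("Lipid"))    # the cue brings up all three facts
print(recall("protein"))  # no cue was built, so nothing comes back
```

A cue that was never built returns nothing, which mirrors the retrieval cue failures described earlier.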

Conclusion

While there were a lot of different ideas covered in this article, this is only scratching the surface of what we know about the brain and memory. As we discover more and more about the brain, we can begin to make more educated choices in terms of how we study, how we avoid forgetting, and so much more! Ultimately, it’s up to you to take your learning and memory into your own hands. Start with this: How much of this article do you remember? And what could you have done better to remember more of it?

Works Cited

1. https://www.purdueglobal.edu/blog/student-life/everything-about-memory/

2. https://www.frontiersin.org/articles/10.3389/fphar.2017.00438/full

3. https://kids.frontiersin.org/articles/10.3389/frym.2016.00005

4. https://kids.frontiersin.org/articles/10.3389/frym.2014.00023

5. https://www.ucdavis.edu/news/memory-replay-prioritizes-high-reward-memories

6. https://news.stanford.edu/pr/2007/pr-memory-060607.html

7. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2644330/

Images Cited

- https://unsplash.com/photos/OgvqXGL7XO4 (Used in Featured Image)

- https://kids.frontiersin.org/articles/10.3389/frym.2016.00005

- https://www.the-scientist.com/infographics/infographic-what-social-isolation-can-mean-for-the-brain-67706