New Study from the University of Tokyo Uncovers Unexpected Similarities Between AI Chatbots and Human Language Disorders

A pioneering study conducted by the University of Tokyo has revealed surprising similarities between the functioning of large language models (LLMs) and the brain activity patterns seen in individuals with receptive aphasia, a language disorder that affects comprehension. Published on May 14, 2025, in the journal Advanced Science, this research could lead to significant advancements in both artificial intelligence and the diagnosis and treatment of language-related neurological disorders.

AI “Hallucinations” and Human Aphasia: An Intriguing Connection

Contemporary AI chatbots driven by LLMs—like GPT-2, ALBERT, and Llama—are acclaimed for producing coherent and fluent responses. However, these systems can also exhibit “hallucinations”: confidently articulated outputs that may include factual errors or invented information. While typically deemed a flaw in AI design, researchers at the University of Tokyo observed that this peculiar characteristic mirrors patterns identified in human language disorders.

Specifically, this phenomenon corresponds with behaviors observed in individuals affected by Wernicke’s aphasia, a type of receptive aphasia. Those with this condition often speak fluently, yet their speech may lack logical coherence or appropriate word usage, resulting in sentences that are grammatically correct but semantically empty.

Chief researcher Professor Takamitsu Watanabe from the International Research Center for Neurointelligence at the University of Tokyo emphasized the striking similarity: “My team and I were particularly struck by how this behavior resembles that of individuals with Wernicke’s aphasia, where such individuals can converse fluently but do not always make much logical sense.”

Energy Landscape Analysis: Mapping Thought Processes

To delve deeper into this connection, researchers utilized a technique called energy landscape analysis. Initially formulated in physics and now applied in neuroscience and AI research, this method creates a visual representation of how systems—be they biological or artificial—shift between various internal states.

In layman’s terms, energy landscapes can be likened to topographical maps, where distinct locations symbolize possible states of brain activity or AI computation. A neural state, whether in a human brain or an LLM, can be imagined as a ball moving along this landscape. Smooth valleys signify stable, consistent states, while scattered hills and shallow areas indicate erratic or unstable processing.
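The ball-and-valley picture can be made concrete with a small sketch. The article does not describe the study's actual fitting procedure, so the code below assumes a pairwise (Ising-like) energy function over binary on/off states, a form commonly used in energy landscape analysis. The function names and the parameters `h` and `J` are illustrative and hypothetical, not taken from the paper. A "valley" here is a state whose energy is lower than that of every state reachable by flipping a single unit.

```python
import itertools
import numpy as np

def energy(state, h, J):
    """Energy of one binary state (+1/-1 per unit) under an assumed pairwise model."""
    return float(-h @ state - 0.5 * state @ J @ state)

def local_minima(h, J):
    """List (state, energy) pairs that are lower than all single-flip neighbours."""
    n = len(h)
    minima = []
    # Exhaustive enumeration: only feasible for a small number of units.
    for bits in itertools.product([-1, 1], repeat=n):
        s = np.array(bits, dtype=float)
        e = energy(s, h, J)
        is_valley = True
        for i in range(n):
            t = s.copy()
            t[i] = -t[i]  # flip one unit to reach a neighbouring state
            if energy(t, h, J) <= e:
                is_valley = False
                break
        if is_valley:
            minima.append((bits, e))
    return minima

# Hypothetical toy parameters: three units that all prefer to agree.
h = np.zeros(3)
J = np.array([[0.0, 1.0, 1.0],
              [1.0, 0.0, 1.0],
              [1.0, 1.0, 0.0]])

minima = local_minima(h, J)
for state, e in minima:
    print(state, e)
```

In this toy case the landscape has two valleys, the all-off and all-on states, which is the kind of two-basin structure the "ball on a map" analogy describes. In a real analysis the parameters would be fitted to recorded activity rather than chosen by hand.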

Their analysis found that LLMs and individuals with receptive aphasia shared remarkably similar traits, most notably what the researchers described as “polarized distributions” in the way internal states transitioned and stabilized. These included:

– Bimodal transitions in activity that appeared to oscillate between two extremes

– Extended “dwell times” in specific cognitive or computational states

– Unusually high Gini coefficients—a statistical indicator of inequality—linked to poor comprehension or diminished output coherence

These observations imply that both LLMs and aphasia-affected individuals may face systemic vulnerabilities that impede their capacity to extract meaning from information or to communicate it accurately.
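Two of the measures listed above, dwell time and the Gini coefficient, are simple to compute once a sequence of internal states is available. The following is a minimal sketch, not the study's code: the state labels and the helper names `dwell_times` and `gini` are hypothetical, and the article does not specify exactly which quantities the researchers fed into the Gini calculation.

```python
import numpy as np

def dwell_times(labels):
    """Return (state, run_length) for each consecutive run of the same label."""
    runs = []
    count = 1
    for prev, cur in zip(labels, labels[1:]):
        if cur == prev:
            count += 1
        else:
            runs.append((prev, count))
            count = 1
    if len(labels) > 0:
        runs.append((labels[-1], count))
    return runs

def gini(values):
    """Gini coefficient of non-negative values: 0 means perfectly equal."""
    x = np.sort(np.asarray(values, dtype=float))
    n = x.size
    if n == 0 or x.sum() == 0:
        return 0.0
    ranks = np.arange(1, n + 1)
    # Standard rank-based formula on the sorted values.
    return float((2 * ranks - n - 1) @ x / (n * x.sum()))

# Hypothetical sequence of visited states over time.
sequence = ["A", "A", "B", "A", "A", "A"]
runs = dwell_times(sequence)
print(runs)

# Equal values give 0; one dominant value pushes the coefficient up.
print(gini([1, 1, 1, 1]))
print(gini([0, 0, 0, 10]))
```

A high Gini coefficient in this setting means a few states or transitions account for most of the activity, which fits the "polarized" pattern the researchers describe.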

Key Study Takeaways

– Four models—ALBERT, GPT-2, Llama-3.1, and a variant tailored for Japanese—showed internal patterns reminiscent of receptive aphasia.

– The researchers evaluated energy dynamics across these AI systems alongside brain scans from stroke patients with four types of aphasia and healthy control subjects.

– Variations in energy landscapes allowed for the differentiation of various types of aphasia based on internal neural transitions rather than merely external language behavior.

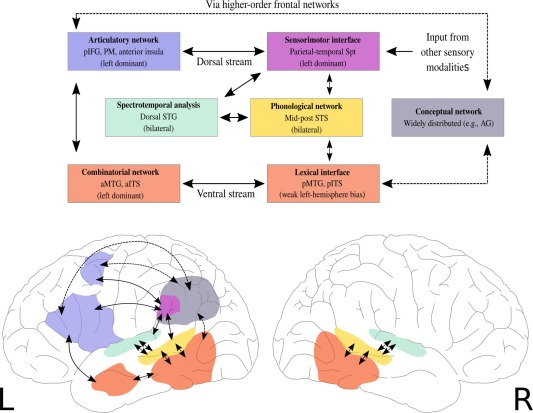

– The study utilized resting-state fMRI data to pinpoint core neural network patterns linked to different aphasia disorders.

Implications: Enhancing AI and Advancing Medical Diagnostics

The findings carry significant implications for both technological and medical fields.

In neuroscience and clinical practice, the study presents a novel framework for understanding and categorizing language disorders. Traditionally, diagnosis relies heavily on behavioral evaluations, such as language tests or assessments of functional ability, but mapping internal network dynamics could allow for earlier and more accurate identification of aphasia subtypes.

For AI developers, this research sheds light on why sophisticated language models might still struggle to provide accurate and meaningful outputs, despite their fluency. It suggests that some generative errors may arise not solely from inadequate training data or model adjustments, but from inherent structural dynamics within the models themselves.

“The analogous internal behavior we’re observing could indicate cognitive rigidity,” remarked Watanabe. “We’re not implying that chatbots experience brain damage, naturally—but they might be affected by a sort of internal inflexibility that reflects human deficiencies.”

Future Directions: Connecting Cognitive Science and AI

While these parallels are compelling, the researchers urge caution against sweeping generalizations. The human brain is significantly more complex and adaptable than any existing AI, with language disorders stemming from intricate interactions across various brain regions and functions.

Nonetheless, this study paves the way for promising interdisciplinary research. As LLMs increasingly integrate into customer service, education, and mental health applications, ensuring their reliability and comprehending their limitations becomes more vital than ever.