Robots Acquire the Ability to “Feel” Like Humans, Thanks to a New Breakthrough from MIT and Amazon Robotics

In a notable step toward human-like robotic perception, researchers from MIT, Amazon Robotics, and the University of British Columbia have developed a method that lets robots infer an object’s properties simply by picking it up and moving it, without cameras or specialized external sensors.

The system lets a robot estimate an object’s weight, softness, or internal structure using only the movement sensors built into its joints. Much as a person might shake a closed box to guess what is inside, the robot draws on signals from its own joints to learn about the objects it handles.

Drawing Inspiration from Human Touch

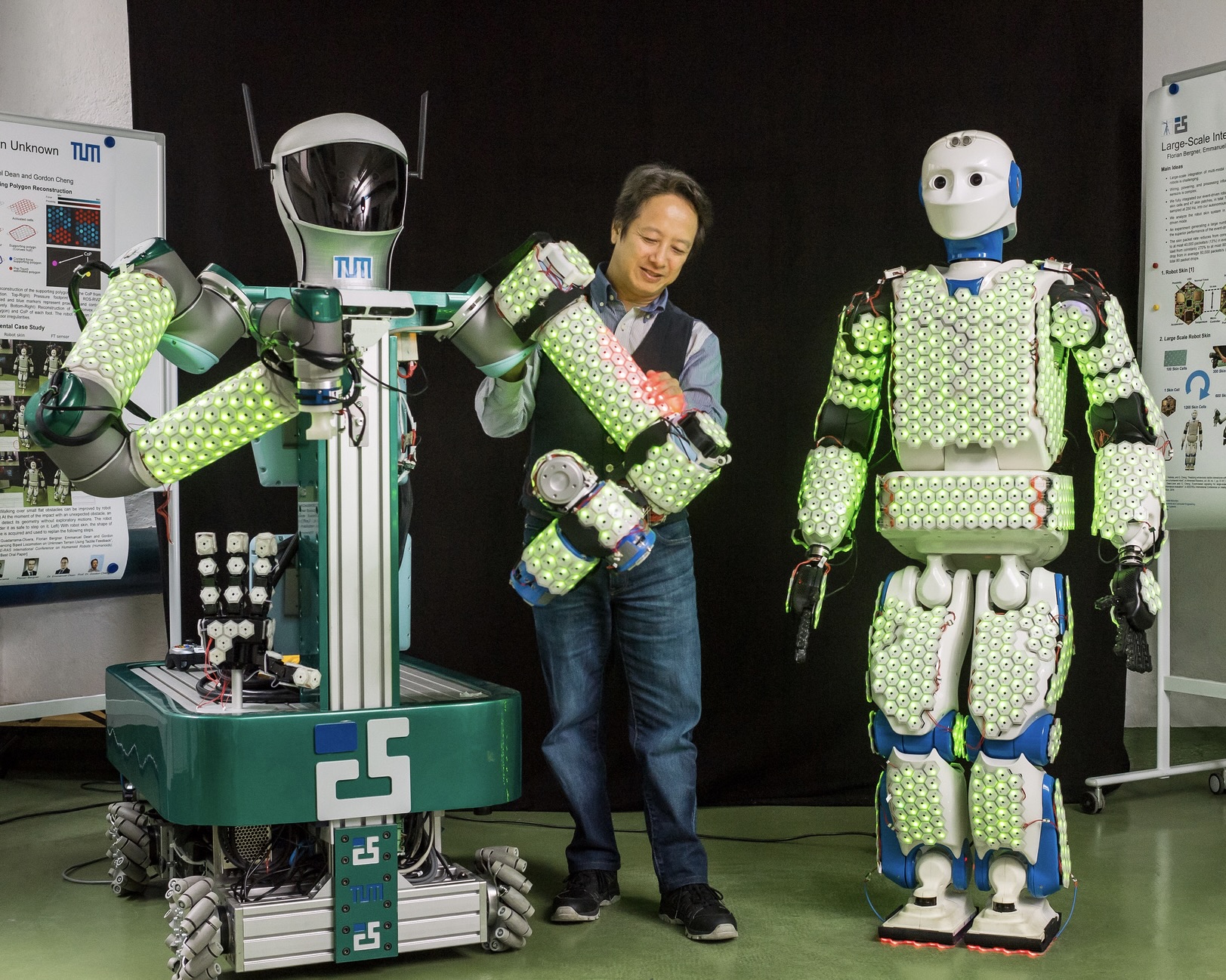

Humans rely heavily on touch and on proprioception, the sense of where our limbs are and how they are moving, to perceive their surroundings. We naturally judge an object’s weight, texture, and stiffness by how it resists or yields to our grasp. Robots, by contrast, have traditionally needed external instruments such as cameras or force sensors to do the same.

“A human doesn’t have incredibly precise measurements of the angles of our finger joints or the exact torque applied to an object, but a robot does. We capitalize on these capabilities,” said Chao Liu, a postdoctoral research associate at MIT who helped develop the system.

The approach relies on robotic proprioception: a robot’s awareness of its own body and movements. Sensors inside the robot’s joints record small forces and joint positions as it handles an object, and that information alone is enough for the robot to estimate the object’s physical properties.
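The sketch below is a deliberately simplified illustration of why joint readings carry information about a held object. It is not the researchers’ method, which uses dynamic motion and simulation. Instead it shows the static case: a single hypothetical joint holds an arm still, and the torque it must supply grows with the payload’s mass, so inverting that torque balance recovers the mass. The arm dimensions, masses, and the function names `holding_torque` and `estimate_payload` are illustrative assumptions.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

# Hypothetical single-joint arm; all parameter values are illustrative assumptions.
ARM_MASS_KG = 1.0   # mass of the arm link
ARM_COM_M = 0.25    # distance from the joint to the link's center of mass
GRIP_M = 0.5        # distance from the joint to the held object


def holding_torque(payload_kg, angle_rad):
    """Joint torque needed to hold the arm still at angle_rad (0 = horizontal)."""
    return G * math.cos(angle_rad) * (ARM_MASS_KG * ARM_COM_M + payload_kg * GRIP_M)


def estimate_payload(measured_torque, angle_rad):
    """Invert the static torque balance to recover the payload mass in kg."""
    lever = G * math.cos(angle_rad)  # shrinks toward 0 as the arm points straight up
    return (measured_torque / lever - ARM_MASS_KG * ARM_COM_M) / GRIP_M


tau = holding_torque(0.3, 0.0)  # stand-in for a reading from the joint's torque sensor
print(round(estimate_payload(tau, 0.0), 6))  # 0.3
```

A torque error of δτ becomes a mass error of δτ / (g · GRIP_M · cos θ), so the estimate is most reliable with the arm near horizontal and degrades as it approaches vertical.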

Digital Twins: The Technology Underpinning the Touch

At the core of the system is a computer model known as a “digital twin,” a virtual replica of both the robot and the object it is examining. As the real robot manipulates the object, the digital twin performs the same motions in simulation.
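To make the replay idea concrete, here is a toy sketch. It assumes a single-joint arm holding a point-mass payload and driven by a PD controller, and it replays the same sequence of commanded joint angles in simulation for several candidate masses. It then keeps the candidate whose predicted joint angles best match the “measured” ones. The model, gains, and names (`simulate_arm`, `mismatch`) are hypothetical. The measured data is synthetic, and the coarse grid search stands in for the gradient-based tuning the researchers describe.

```python
import math

G = 9.81  # m/s^2


def simulate_arm(payload_kg, commands, dt=0.01, grip_m=0.5, arm_inertia=0.05,
                 damping=0.1, kp=20.0, kd=1.0):
    """Replay commanded joint angles on a hypothetical 1-DOF arm holding a
    point-mass payload; return the predicted joint-angle trajectory."""
    theta, omega = 0.0, 0.0
    inertia = arm_inertia + payload_kg * grip_m ** 2
    angles = []
    for target in commands:
        motor = kp * (target - theta) - kd * omega            # PD controller torque
        gravity = payload_kg * G * grip_m * math.cos(theta)   # payload's gravity torque
        alpha = (motor - gravity - damping * omega) / inertia
        omega += alpha * dt                                    # semi-implicit Euler step
        theta += omega * dt
        angles.append(theta)
    return angles


def mismatch(predicted, measured):
    """Mean squared difference between predicted and measured joint angles."""
    return sum((p - m) ** 2 for p, m in zip(predicted, measured)) / len(measured)


# A 1 Hz "shake" lasting 3 s, sampled every 10 ms.
commands = [0.4 * math.sin(2 * math.pi * k * 0.01) for k in range(300)]
measured = simulate_arm(0.3, commands)  # synthetic stand-in for logged encoder data
candidates = [0.1, 0.2, 0.3, 0.4, 0.5]
best = min(candidates, key=lambda m: mismatch(simulate_arm(m, commands), measured))
print(best)  # 0.3
```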

The software uses a technique called differentiable simulation, which computes how small changes in the object’s assumed properties (such as its weight or softness) would change the robot’s simulated joint motion. By comparing the simulated motion with the motion measured on the real robot, the program repeatedly adjusts those properties until the digital twin reproduces the real-world behavior.
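Below is a minimal sketch of the differentiable-simulation loop described above. It is not the authors’ implementation. It uses the same kind of toy single-joint arm model (an assumption) and makes it differentiable with forward-mode automatic differentiation via dual numbers, so every simulated joint angle also carries its derivative with respect to the payload mass. A Gauss-Newton update then uses those derivatives to move the mass estimate toward the value whose simulation matches the “measured” trajectory. The optimizer, gains, and the names `Dual`, `simulate`, and `fit_mass` are illustrative choices, and only mass, not softness, is estimated.

```python
import math
from dataclasses import dataclass

G = 9.81  # m/s^2


@dataclass
class Dual:
    """A value paired with its derivative w.r.t. one parameter (forward-mode autodiff)."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        o = Dual.lift(other)
        return Dual(self.val / o.val, (self.der * o.val - self.val * o.der) / (o.val * o.val))


def dcos(x):
    """cos() that also propagates the derivative (chain rule)."""
    return Dual(math.cos(x.val), -math.sin(x.val) * x.der)


def simulate(payload, commands, dt=0.01, grip=0.5, arm_inertia=0.05,
             damping=0.1, kp=20.0, kd=1.0):
    """Hypothetical 1-DOF arm model whose states are Duals, so each joint angle
    carries d(angle)/d(payload mass) alongside its value."""
    theta, omega = Dual(0.0), Dual(0.0)
    inertia = arm_inertia + payload * (grip * grip)
    out = []
    for target in commands:
        motor = kp * (target - theta) - kd * omega           # PD controller torque
        gravity = payload * (G * grip) * dcos(theta)         # payload's gravity torque
        alpha = (motor - gravity - damping * omega) / inertia
        omega = omega + alpha * dt                            # semi-implicit Euler step
        theta = theta + omega * dt
        out.append(theta)
    return out


def fit_mass(commands, measured, guess=0.1, iters=20):
    """Gauss-Newton on the mass, using derivatives taken through the simulator."""
    m = guess
    for _ in range(iters):
        sim = simulate(Dual(m, 1.0), commands)  # seed d(mass)/d(mass) = 1
        num = sum((s.val - y) * s.der for s, y in zip(sim, measured))
        den = sum(s.der * s.der for s in sim)
        m = max(m - num / den, 1e-3)  # keep the mass physically positive
    return m


commands = [0.4 * math.sin(2 * math.pi * k * 0.01) for k in range(300)]
measured = [s.val for s in simulate(Dual(0.3), commands)]  # synthetic "encoder" data
estimate = fit_mass(commands, measured)
print(round(estimate, 4))
```

Forward mode is a natural fit here because only one parameter is being estimated. With many unknown properties, reverse-mode differentiation, as provided by autodiff frameworks, usually scales better.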

“Having an exact digital twin of the physical world is crucial for the success of our technique,” said Peter Yichen Chen, the lead author of the research paper.

As a result, the system can infer the properties of an unknown object simply by observing how the robot’s joints respond while it manipulates that object.

Real-World Applications: From Warehouses to Disaster Areas

The method is especially useful where vision-based systems struggle, such as in dark rooms, smoky or dusty spaces, or cluttered disaster zones. It also offers a low-cost, reliable alternative, because it needs no cameras or external sensors, only the sensors already built into the robot.

In experimental scenarios, the research team observed that robots employing the new system could:

– Estimate the weight of various objects with errors as small as 0.002 kg (2 grams)

– Identify material softness or firmness

– Infer the contents of sealed containers

These capabilities have significant implications for warehouse automation, search-and-rescue missions, and household robotics. Robots that can recognize different materials and handle them appropriately without visual input become far more versatile and safer.

“This concept is broad, and I think we are just beginning to explore what robots can learn in this manner,” stated Chen. “My aspiration is to see robots venture into the world, touch and move things within their surroundings, and independently deduce the properties of everything they come in contact with.”

Future Directions

Moving forward, the researchers intend to enhance the system’s capabilities by incorporating visual data from cameras alongside proprioceptive knowledge. This combined approach could yield even more accurate results, facilitating quicker learning and more complex interactions for the robot.

Another challenge is handling objects whose properties are non-uniform or change as they move, such as liquids, granular materials like sand, or items with internal moving parts. The research team is hopeful that more data and better modeling will allow the technology to handle such cases.

“This research is groundbreaking because it demonstrates that robots can reliably ascertain attributes like weight and softness using solely their internal joint sensors, without relying on external cameras or specialized measuring tools,” remarked NVIDIA researcher Miles Macklin, who was not part of the study.

A Touching Tomorrow for Robotics

As robots become increasingly prevalent in diverse settings such as our homes, factories, and unpredictable environments, the capacity to “feel” and comprehend the physical world solely through touch will be vital. This study moves machines one step closer to operating with the tactile sensitivity and adaptability characteristic of humans.

Drawing on advances in machine learning, digital simulation, and mechanical engineering, this work promises not just smarter robots but more intuitive, responsive machines that can interact safely and effectively with their surroundings.